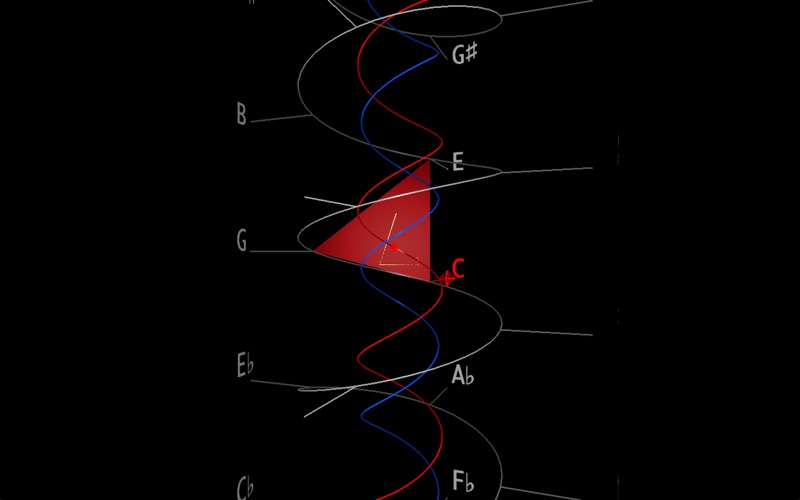

MuSA_RT animates a visual representation of tonal patterns (pitches, chords, and key) in music as it is being performed.

MuSA_RT applies music analysis algorithms rooted in Elaine Chew's Spiral Array model of tonality, which also provides the 3D geometry for the visualization space.
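For reference, the Spiral Array geometry is usually stated roughly as follows in Chew's published formulation. Here $k$ is a pitch's index on the line of fifths (C = 0, G = 1, F = −1, and so on), $r$ and $h$ are the helix radius and rise per fifth, and the weights $w_i$, $u_i$, $\omega_i$ are nonnegative and sum to 1. These are the model's free parameters; the values MuSA_RT uses are not stated here. Pitches lie on a helix, a major or minor triad is a weighted point inside the triangle of its three pitches, a major key is a weighted combination of its tonic, dominant, and subdominant triads, and the Center of Effect (CE) is the duration-weighted average of the sounded pitches ($d_i$ is the duration of pitch $k_i$):

$$
P(k) = \left( r \sin\frac{k\pi}{2},\ r \cos\frac{k\pi}{2},\ k h \right)
$$

$$
C_M(k) = w_1 P(k) + w_2 P(k+1) + w_3 P(k+4), \qquad
C_m(k) = u_1 P(k) + u_2 P(k+1) + u_3 P(k-3)
$$

$$
T_M(k) = \omega_1 C_M(k) + \omega_2 C_M(k+1) + \omega_3 C_M(k-1)
$$

$$
\mathbf{c} = \frac{\sum_i d_i \, P(k_i)}{\sum_i d_i}
$$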

MuSA_RT interprets the audio signal from a microphone to determine pitch names, maintain short-term and long-term tonal context trackers (each a Center of Effect, or CE), and compute the closest triads (three-note chords) and keys as the music unfolds in performance.
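The following minimal sketch illustrates the CE and closest-triad steps in the Spiral Array. It is not MuSA_RT's code. It assumes the audio-to-pitch stage has already produced spelled note names with durations, and it uses illustrative values for the helix radius `R`, rise `H`, and triad weights `W` rather than any calibrated ones. All function names are hypothetical.

```python
import math

# Assumed illustrative parameters (not MuSA_RT's calibrated values):
R = 1.0                    # helix radius
H = math.sqrt(2 / 15)      # vertical rise per step along the line of fifths
W = (0.6, 0.25, 0.15)      # triad weights for root, fifth, third (sum to 1)

# Line-of-fifths index of each natural letter, with C = 0.
LETTERS = {"F": -1, "C": 0, "G": 1, "D": 2, "A": 3, "E": 4, "B": 5}


def fifths_index(name: str) -> int:
    """Map a spelled pitch like 'Bb' or 'F#' to its line-of-fifths index."""
    # Each sharp moves 7 fifths up, each flat 7 fifths down.
    return LETTERS[name[0]] + 7 * name.count("#") - 7 * name.count("b")


def pitch_name(k: int) -> str:
    """Inverse of fifths_index: spell index k with sharps or flats."""
    letter = "FCGDAEB"[(k + 1) % 7]
    accidentals = (k + 1) // 7
    return letter + ("#" * accidentals if accidentals > 0 else "b" * -accidentals)


def position(k: int) -> tuple[float, float, float]:
    """Point on the pitch helix: a quarter turn and a rise of H per fifth."""
    angle = k * math.pi / 2
    return (R * math.sin(angle), R * math.cos(angle), k * H)


def combine(points, weights):
    """Weighted sum of 3D points (weights are expected to sum to 1)."""
    return tuple(sum(w * p[i] for p, w in zip(points, weights)) for i in range(3))


def major_triad(k: int):
    # Root k, perfect fifth k + 1, major third k + 4.
    return combine([position(k), position(k + 1), position(k + 4)], W)


def minor_triad(k: int):
    # Root k, perfect fifth k + 1, minor third k - 3.
    return combine([position(k), position(k + 1), position(k - 3)], W)


def center_of_effect(notes):
    """Duration-weighted average position of (name, duration) pairs."""
    total = sum(d for _, d in notes)  # must be > 0
    points = [position(fifths_index(name)) for name, _ in notes]
    return combine(points, [d / total for _, d in notes])


def closest_triad(ce, roots=range(-8, 13)):
    """Return the label of the major or minor triad nearest to the CE."""
    candidates = []
    for k in roots:
        candidates.append((math.dist(ce, major_triad(k)), f"{pitch_name(k)} major"))
        candidates.append((math.dist(ce, minor_triad(k)), f"{pitch_name(k)} minor"))
    return min(candidates)[1]


if __name__ == "__main__":
    ce = center_of_effect([("C", 1.0), ("E", 1.0), ("G", 1.0)])
    print(closest_triad(ce))  # C major
```

Under one plausible assumption, the short-term and long-term trackers could be realized by computing `center_of_effect` over recent notes in windows of different lengths. A closest-key search would follow the same nearest-point pattern, using key representations instead of triads.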

MuSA_RT presents graphical representations of these tonal entities in context, smoothly rotating the virtual camera to provide an unobstructed view of the current information.

MuSA_RT also offers an experimental immersive augmented reality experience where supported.